- Published on

2024 Wrapped: Learnings and Takeaways

- Authors

- Name

- Swapnil Bhatkar

- @swapnilbhatkar7

Welcome to the first blog of my personal writing expedition! Over the past few years, I’ve diligently maintained a daily gratitude journal, but this marks the first time I’m sharing my thoughts publicly.

The purpose of this space is to document my learnings, reflect on personal and professional growth, and provide a snapshot of where I am today versus where I aspire to be. It feels fitting to title this inaugural post: "2024 Wrapped: Learnings and Takeaways"

Let’s dive in.

- Kicking Off 2024: HPC and Google's Gen AI

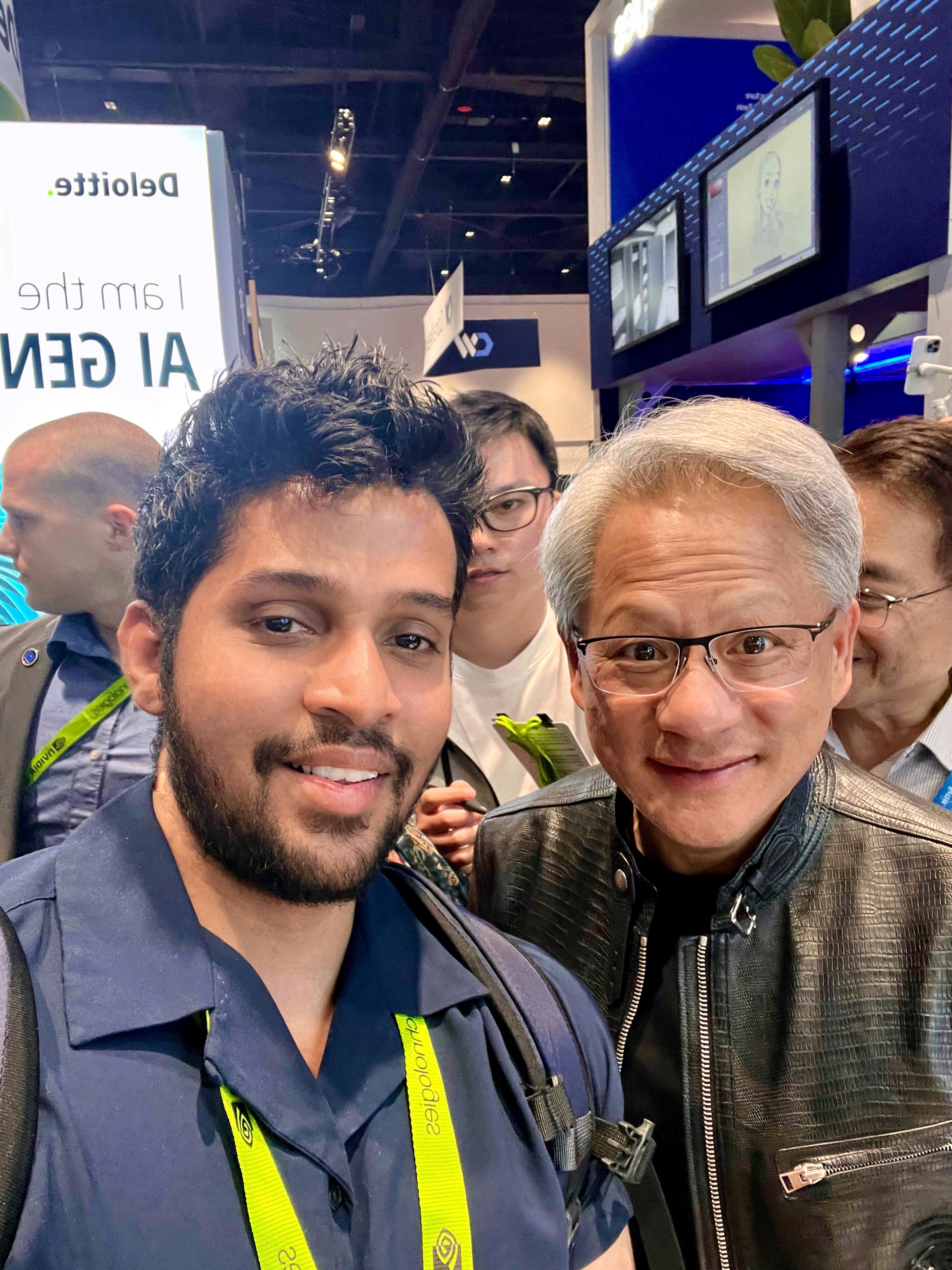

- March: NVIDIA GTC and fanboy moment with Jensen Huang

- April: Google Cloud Next

- May: NREL Energize Forum and DOE Fusion

- August: AWS certs renewal

- December: AWS reInvent and Streamlining Multi-Cloud Infrastructure

- Looking Ahead: 2025 Predictions

Kicking Off 2024: HPC and Google's Gen AI

The year kicked off with organizing a two-day workshop featuring HPC and Generative AI specialists from Google Cloud. Our focus was to introduce NREL’s research teams to Google’s Gemini 1.0 Pro and Flash, large language models that were still in preview back then (classic Google!). At the time, many of us still referred to it as "Bard", Gemini's predecessor, which now feels like ancient history in the fast-paced AI landscape.

What truly impressed me was their seamless integration with the broader Google ecosystem - Vertex AI, GKE, and Google Cloud Storage. Google Cloud's Model Catalog emerged as a standout feature, positioning them ahead of other major cloud providers. The ability to deploy open-source models for text, vision, and speech directly from Hugging Face with a single click, hosting them on Vertex AI endpoints or GKE clusters as a service, was a game-changer for our workflows.

Day two spotlighted the HPC Toolkit, an open-source tool for building HPC clusters on GCP using Terraform. Unlike AWS ParallelCluster, which relies on YAML configurations, Terraform felt refreshingly modular. It aligned perfectly with our team's infrastructure-as-code practices. This compatibility allowed us to leverage our existing private registry modules for core infrastructure components like VPC, subnets, and IAM

March: NVIDIA GTC and fanboy moment with Jensen Huang

In March, I attended my first NVIDIA GTC in San Jose, California. Jensen Huang’s keynote was electrifying—when was the last time a tech CEO got a rockstar entrance? His announcements, including the Blackwell GB200 superchip, Project GR00T, and NVIDIA NIM microservices.

NOTE

Meeting Jensen Huang in the expo hall was a fanboy moment I'll never forget. His down-to-earth personality and genuine passion for science shone through in our brief interaction, even as I snapped a selfie with him in his iconic black jacket.

The expo hall's Edge AI demonstrations revealed something crucial: Chinese and Taiwanese startups are significantly ahead in hardware, electronics, and manufacturing. Their positioning in the AI, supercomputing, and energy sectors is remarkably strong.

Inspired, I picked up an NVIDIA Jetson Orin Nano Developer Kit to tinker with quantized open-source models. Installed a 1 TB NVMe SSD drive to speed up model loading from storage to memory. After several attempts, I was able to flash my SSD with NVIDIA SDK manager to install Jetpack 6 DP

April: Google Cloud Next

In April, I attended my 2nd Google Cloud Next conference in Las Vegas at Mandalay Bay. Again, the theme was around Large Language models. One thing that stood out to me was how Google embedded their premium models in almost every major cloud product and service they built. Not just cloud but even their other flagship products like Search, Photos and Maps

A standout demo featured their solar APIs for rooftop manufacturers, leveraging satellite and aerial imagery. This inspired me to build a side project: a demo where users could upload their energy bills, have an LLM parse the data into a structured format, and receive energy recommendations based on geolocation, consumer behavior, and available federal programs. More details about this project will be made available soon on the blog

Workshops and sessions on hosting open-source LLMs on GKE clusters using vLLM and TensorRT-LLM frameworks particularly caught my attention. I researched about it a lot especially concepts like quantization, KV cache and speculative decoding. The vLLM framework impressed me with its ease of setup, OpenAI API compatibility, and documentation for NVIDIA GPU tensor parallelism implementation. This proved especially valuable given the release of 17 different open-source LLMs just in April alone, including the big launch of Llama 3.

May: NREL Energize Forum and DOE Fusion

In May, I presented at NREL Energize forum for the 1st time ever. It is an internal NREL forum that brings together researchers from all groups to showcase their projects. Our team built a prototype of Live Llava models developed by MIT HAN lab running on device locally without internet connectivity. Inspired by the original idea from Jetson AI lab, we streamed a live camera feed over the local network with Web RTC. Behind the scenes, the vision language model studies the frames coming from the video stream and then responds back to the user based on the prompts that we give in real time. The audience loved it, and it highlighted our team’s capabilities in IoT, AI/ML, and edge computing.

I gave a talk at the NREL Energize Forum, discussing the state of LLMs at NREL and how we can help researchers across the lab to build their own AI powered agents, knowledge base, RAG applications using models hosted in our multi-cloud environments especially Azure Open AI, GCP Vertex AI and Amazon Bedrock

I gave a talk at DOE Fusion, an invite-only conference for 17 US national laboratories in partnership with AWS. This year, the event was hosted at NREL. I spoke about fine-tuning and inference strategies for open-source LLMs, delving into Q-LoRA, domain specific datasets from NREL, AWS SageMaker for training jobs and Kubernetes for inference server deployment. I would like to continue in this direction to further build my skills in quantization, model distillation, and benchmarking with different LLM inference frameworks

August: AWS certs renewal

August brought a significant milestone: successfully renewing my AWS Solutions Architect Professional certification. My preparation strategy combined traditional resources with cutting-edge AI tools. While AWS's official documentation and Udemy practice tests formed the foundation, I found myself turning to AI assistants like Claude and Perplexity for deeper dives into complex scenarios. Perplexity's ability to access real-time internet resources proved particularly valuable for understanding emerging AWS services and architectural patterns. To my surprise (and relief), scoring 80% felt particularly rewarding given the exam's reputation for complex, scenario-based and notoriously tough questions. It was a positive experience, not just for the certification itself, but for reinforcing the foundational knowledge that has been instrumental in shaping my career since landing my current role in 2021.

December: AWS reInvent and Streamlining Multi-Cloud Infrastructure

December brought me back to Las Vegas for AWS re:Invent, marking my sixth in-person attendance (seventh overall) at this cornerstone tech event. The standout moment came during Peter DeSantis's keynote, where he unveiled AWS's Trn2 UltraServers – the future training ground for Anthropic's next-generation models. This announcement underscored a fascinating trend: all three major cloud providers are now investing heavily in custom silicon, reducing their dependence on NVIDIA GPUs and their notoriously long procurement cycles.

As any re:Invent veteran knows, the conference turned the Vegas Strip into a sprawling tech campus, with workshops spread across multiple hotels. I found myself ping-ponging between venues, diving deep into sessions on HPC, Kubernetes, SageMaker, and IoT services. It was like a high-stakes game of technical hopscotch – minus the gambling, though the odds of making it to all my selected sessions on time felt about as challenging as hitting a royal flush.

Our team provides research infrastructure on all 3 major cloud providers to all research teams at NREL. That includes access to state-of-the-art models from Open AI, Google and Anthropic via Azure Foundry, Google Cloud Vertex AI and Amazon Bedrock respectively. Since most of them want to try out new models for their respective use cases, there was no easy way to setup API endpoints, IAM policies, guardrails and cost usage on a per-team basis. Configuring everything manually across three cloud providers is tedious, error-prone, and time-consuming, error prone and time consuming. But one tool that binds them together is Terraform.

I built, test and published a terraform module for our private cloud registry, addressing the challenge of managing research infrastructure across multiple cloud providers. The module streamlines:

- Azure OpenAI service setup, including GPT model deployments, team-specific resource groups, enterprise firewall policies, and private DNS

- AWS configurations for SSO groups in Azure Entra, least-privilege IAM policies for Anthropic and Meta model access, and detailed cost tracking with application inference profiles in Amazon Bedrock

- Google Cloud landing zone deployment, covering project setup, billing configuration, storage management, Vertex AI API enablement, and SSO-based IAM groups

Looking Ahead: 2025 Predictions

As we move into 2025, I anticipate several exciting developments: open-source AI models becoming mainstream, the rise of AI agents, domain-specific SLMs, and tiny VLMs running on edge devices. I expect inference costs to decrease, making these technologies more accessible than ever. This year has been an incredible journey at the intersection of AI and energy research. I'm grateful for these experiences and excited to continue sharing my learnings on this platform.

Here’s to the journey ahead!